What do you do when the administration steps in?

The administration removed my posters after survey results were critical of the school. Here's what I learned from the experience.

Let me start out this post with some background:

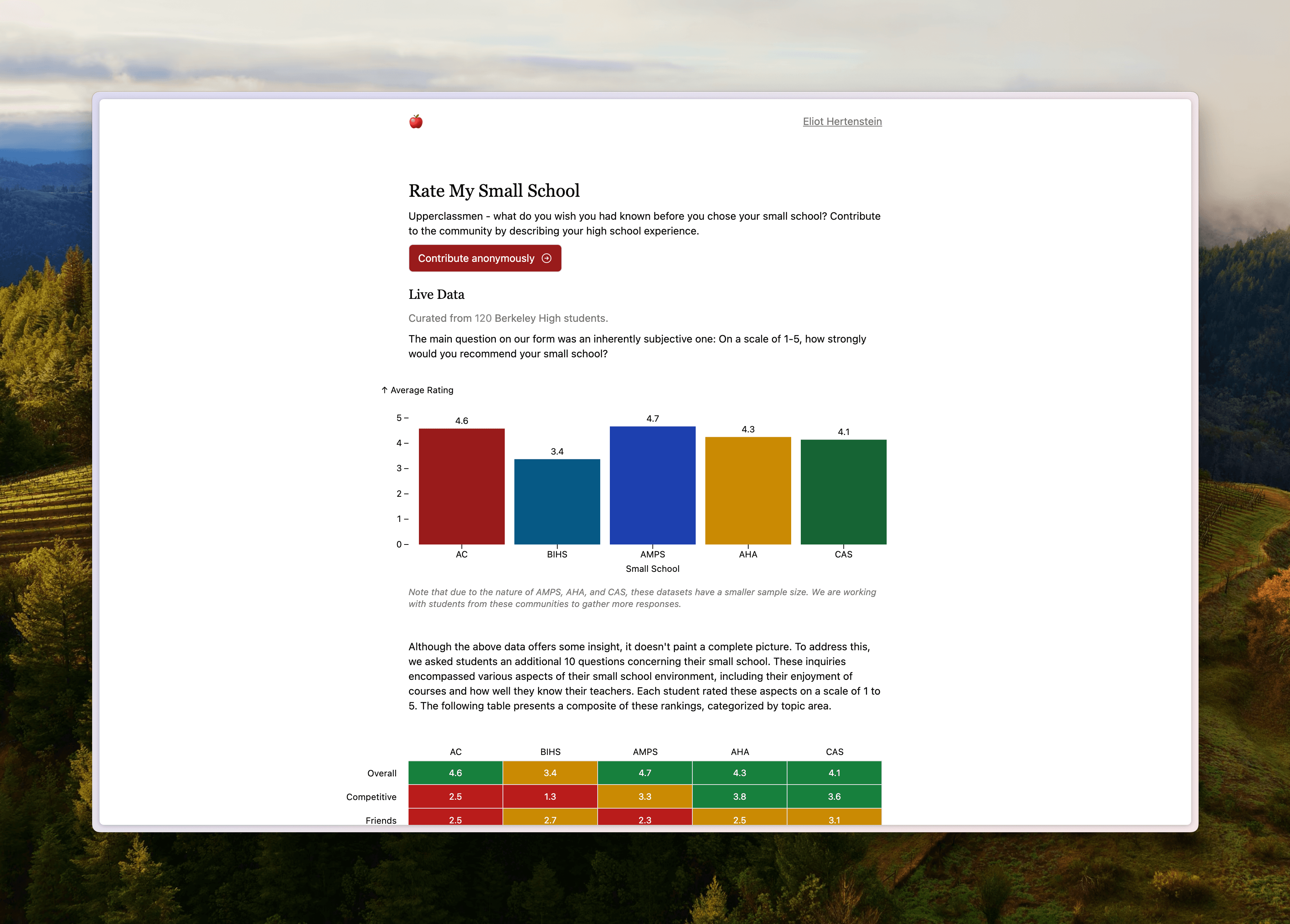

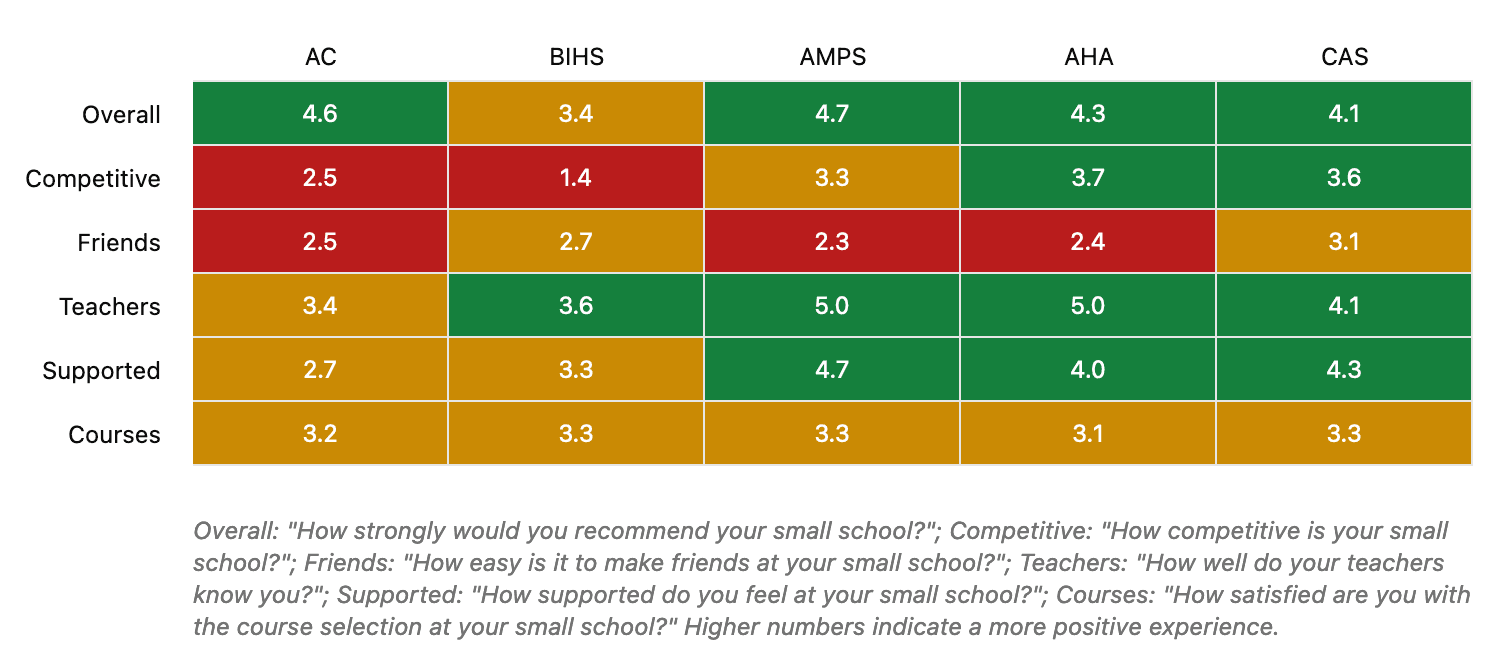

Every year at my high school, each freshman decides which "small school" to self-select into: AC (Academic Choice, or the traditional high-school experience with AP classes), BIHS (Berkeley International High School, a more "international-focused" school with a built-in IB program), AMPS (the Academy of Medicine and Public Service, designed for students interested in the medical field), AHA (the Arts and Humanities Academy, for an arts-focused curriculum) and CAS (Community Arts and Sciences, a community focused on social justice and communication). I am in BIHS. If this sounds confusing, try thinking about it from the perspective of a freshman who has no idea what they want to do in life.

So, as a passion project, I built Rate My Small School. The idea was simple: a survey that upperclassmen could fill out to speak about the experience in their small school. I had two key principles in mind:

- Surveys should be independent of the district. Current surveys are usually run by the small schools themselves, or by the administration, meaning that students can be hesitant to share information critical of the way that the school is run.

- The data should be live. Usually, when this sort of information is collected, it takes a while to get to the students who need it, or never makes it to students at all. I wanted to make sure that when someone fills out the survey, they can see the immediate impact of their response.

As the project started to pick up steam, I noticed a trend: the smaller schools had far fewer responses, even when adjusted for school population. I figured this was an issue of reach, so I decided to take the usual route for school projects: hanging up posters.
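Adjusting for school population means comparing responses per enrolled student rather than raw counts. A minimal sketch of that normalization, assuming hypothetical per-school response counts and enrollment figures (the inputs are placeholders, not survey data):

```typescript
// Hypothetical input shape: raw response count and enrollment per small school.
type SchoolCounts = { school: string; responses: number; enrollment: number };

// Responses per enrolled student, so schools of very different sizes can be
// compared fairly. Schools with no enrollment data are skipped, and the
// result is sorted from the least-reached school to the most-reached.
function responseRates(counts: SchoolCounts[]): { school: string; rate: number }[] {
  return counts
    .filter((c) => c.enrollment > 0)
    .map((c) => ({ school: c.school, rate: c.responses / c.enrollment }))
    .sort((a, b) => a.rate - b.rate);
}
```

A school near the top of this list is under-reached relative to its size, even if its raw response count looks reasonable.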

The Technical Details

If you're only interested in the problems I ran into with the school administration, feel free to skip to the next section. However, I still think the process of setting up the posters was interesting in itself.

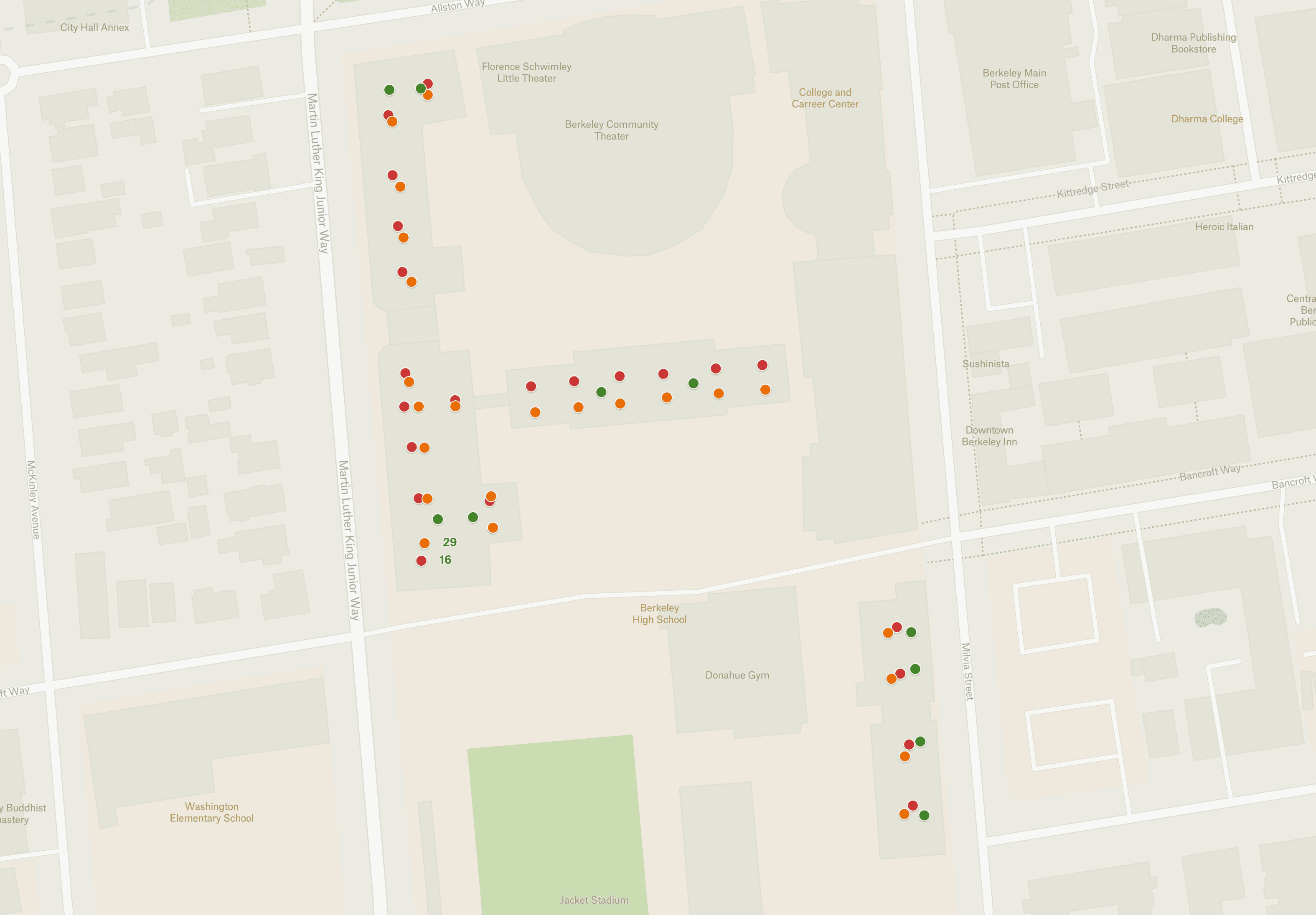

Inspired by previous projects like this one from my friend Jerome, I decided that the posters would be dual-purpose: first, to promote the project, and second, to gather data on the optimal places to hang posters at my school. The framework here is relatively simple: generate a custom QR code for each poster, and then track whenever it is scanned.
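Each poster needs its own short, hard-to-guess slug to put in its QR code's URL. A minimal sketch of generating them, assuming Node's `crypto` module, a hypothetical base URL `https://example.com`, and a hypothetical `P1`, `P2`, ... ID scheme (the real site address and slug format aren't specified here):

```typescript
import { randomBytes } from "crypto";

// Hypothetical poster record: a human-readable ID for the hanging map,
// a random hash used as the ?qr= slug, and the URL encoded in the QR code.
type Poster = { id: string; hash: string; url: string };

function makePosters(count: number, baseUrl: string): Poster[] {
  const seen = new Set<string>();
  const posters: Poster[] = [];
  while (posters.length < count) {
    // 4 random bytes -> 8 hex characters; retry on the (unlikely) collision.
    const hash = randomBytes(4).toString("hex");
    if (seen.has(hash)) continue;
    seen.add(hash);

    const url = new URL(baseUrl);
    url.searchParams.set("qr", hash);
    posters.push({ id: `P${posters.length + 1}`, hash, url: url.toString() });
  }
  return posters;
}
```

Calling `makePosters(55, "https://example.com")` yields 55 distinct URLs of the form `https://example.com/?qr=<hash>`, one per QR code.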

First, how do we actually generate the slugs in the first place? Rather than create an entirely separate script, I chose to integrate the tracking directly into the Rate My Small School website. Each poster would use a link with the ?qr parameter attached. The website, which was written in Next.js, could then intercept that parameter in the middleware and log the QR code that was scanned to a database.

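```typescript
import { NextResponse, userAgent, type NextRequest } from "next/server";
// Project modules (paths are hypothetical): a configured Supabase client,
// and the list of posters, each with an `id` and a unique `hash` slug.
import { supabase } from "./lib/supabase";
import { posters } from "./lib/posters";

export const middleware = async (req: NextRequest) => {
  if (req.nextUrl.pathname === "/") {
    // The poster's slug arrives as ?qr=<hash>
    const qr = req.nextUrl.searchParams.get("qr");
    const poster = qr ? posters.find((poster) => poster.hash === qr) : null;

    const agent = userAgent(req);
    const time = new Date().toISOString();
    const ip =
      req.headers.get("x-real-ip") || req.headers.get("x-forwarded-for");

    if (poster) {
      // Log the scan
      await supabase.from("qr").insert({
        time,
        qr,
        poster: poster.id,
        agent: agent.ua,
        ip,
      });

      // Remember which poster brought this visitor in, so a later
      // survey submission can be attributed to it.
      const response = NextResponse.next();
      response.cookies.set("poster", String(poster.id));
      return response;
    }
  }

  return NextResponse.next();
};
```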

Integrating this into the application also had a secondary benefit. Although the responses were anonymous, I could still store a cookie in the browser with the ID of the scanned QR code. Then, if the user ended up submitting the form, I would be able to see which poster locations actually led to submissions, and hopefully optimize my poster placement in the future.
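Once scans and submissions both carry a poster ID, comparing locations is a simple count per poster. A minimal sketch, assuming hypothetical `Scan` and `Submission` records where a submission's `poster` field holds the cookie value, or `null` if the respondent never scanned a poster:

```typescript
// Hypothetical record shapes for the scan log and the survey responses.
type Scan = { poster: string };
type Submission = { poster: string | null };

type PosterStats = {
  poster: string;
  scans: number;
  submissions: number;
  conversion: number; // submissions per scan
};

// For each poster: how often it was scanned, how many submitted surveys
// carried its cookie, and the ratio between the two.
function posterStats(scans: Scan[], submissions: Submission[]): PosterStats[] {
  const counts = new Map<string, { scans: number; submissions: number }>();
  const entry = (poster: string) => {
    let c = counts.get(poster);
    if (!c) {
      c = { scans: 0, submissions: 0 };
      counts.set(poster, c);
    }
    return c;
  };

  for (const scan of scans) entry(scan.poster).scans++;
  for (const sub of submissions) {
    // Submissions without a poster cookie didn't come from a QR scan.
    if (sub.poster !== null) entry(sub.poster).submissions++;
  }

  return Array.from(counts.entries())
    .map(([poster, c]) => ({
      poster,
      scans: c.scans,
      submissions: c.submissions,
      conversion: c.scans > 0 ? c.submissions / c.scans : 0,
    }))
    .sort((a, b) => b.submissions - a.submissions);
}
```

Sorting by submissions puts the most productive locations first; sorting by `conversion` instead would highlight posters that were scanned rarely but convinced the people who did scan them.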

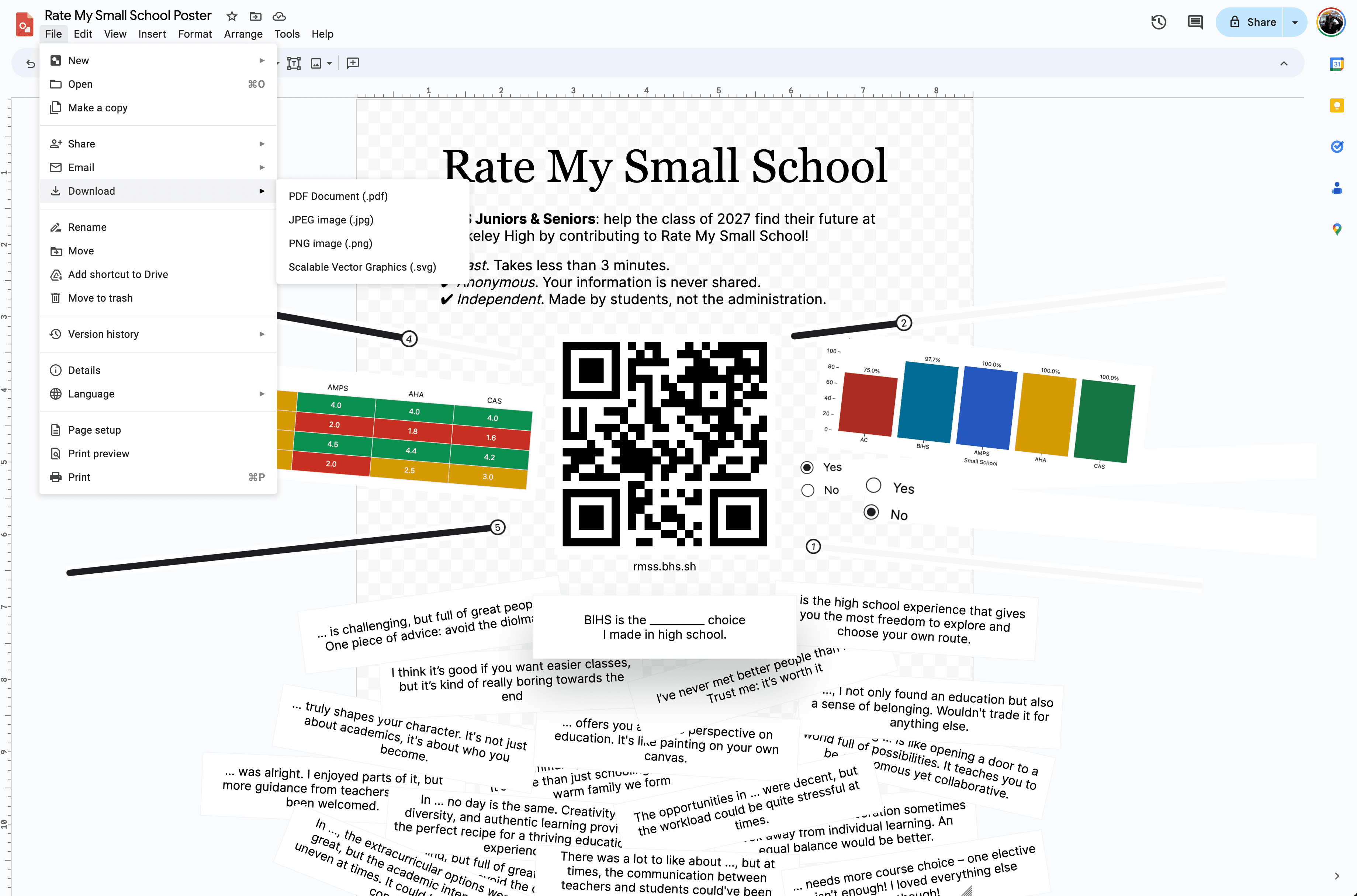

However, there was still one issue: how would I actually get the QR codes onto the posters? I had designed the initial poster in Google Drawings (because it was easy), which conveniently allowed me to export the poster as an SVG. Then, using a QR code generation library and a short TypeScript script, I could generate a base64 image of each QR code and embed it directly into the SVG.
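The embedding step amounts to adding an SVG `<image>` element whose `href` is a base64 data URL. A minimal sketch, assuming the QR code has already been rendered to a PNG data URL (for example with the `qrcode` npm package's `toDataURL`, one possible choice since the library used isn't named) and that the hypothetical `x`, `y`, and `size` values match a placeholder spot on the poster:

```typescript
// Hypothetical placement of the QR code on the poster, in SVG user units.
type Placement = { x: number; y: number; size: number };

// Inserts the QR code as an <image> just before the closing </svg> tag,
// so it is drawn on top of everything else in the exported drawing.
function embedQr(svg: string, pngDataUrl: string, { x, y, size }: Placement): string {
  if (!pngDataUrl.startsWith("data:image/png;base64,")) {
    throw new Error("expected a base64 PNG data URL");
  }
  const close = svg.lastIndexOf("</svg>");
  if (close === -1) throw new Error("no closing </svg> tag found");

  // SVG 2 viewers accept a plain `href`; very old renderers may need `xlink:href`.
  const image =
    `<image x="${x}" y="${y}" width="${size}" height="${size}" href="${pngDataUrl}"/>`;
  return svg.slice(0, close) + image + svg.slice(close);
}
```

Running this once per poster, with that poster's own data URL, produces one print-ready SVG per QR code from a single template.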

The final step was to hang the posters. For this, I created a map with the ID of each poster, ordered so that I could walk from one poster to the next. The goal was to cover most of the school's student buildings, which resulted in a total of 55 posters spread across campus.
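Ordering a map so each poster leads to the next is a small route-planning problem. One simple heuristic, not necessarily how the original map was organized, is a greedy nearest-neighbour walk. This sketch assumes each poster has hypothetical `x`/`y` coordinates on a campus map:

```typescript
// Hypothetical poster location on a campus map (any consistent units).
type Spot = { id: string; x: number; y: number };

const dist = (a: Spot, b: Spot) => Math.hypot(a.x - b.x, a.y - b.y);

// Greedy nearest-neighbour ordering: start at spots[start], then repeatedly
// walk to the closest poster not yet visited. Simple and fast, but not
// guaranteed to find the shortest possible route.
function nearestNeighbourRoute(spots: Spot[], start = 0): string[] {
  if (spots.length === 0) return [];
  if (start < 0 || start >= spots.length) {
    throw new RangeError("start index out of range");
  }

  const remaining = spots.slice();
  let current = remaining.splice(start, 1)[0];
  const route = [current.id];

  while (remaining.length > 0) {
    let best = 0;
    for (let i = 1; i < remaining.length; i++) {
      if (dist(current, remaining[i]) < dist(current, remaining[best])) best = i;
    }
    current = remaining.splice(best, 1)[0];
    route.push(current.id);
  }
  return route;
}
```

With a few dozen posters this runs instantly; straight-line distance ignores walls and stairs, so the result is a starting point to adjust by hand.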

Usually, this would be the point in this sort of article where I'd present my findings. However, the administration got involved before I could get that far. I'm still committed to compiling the data I have.

The Administration

About four school days after I hung up the first half of the posters, I was briefly pulled out of class by the vice principal of BIHS. After I explained the project, I was essentially told the following:

- Surveys of students are generally conducted by the administration.

- Students could have mistakenly believed that my survey was being conducted by the school.

- I wasn't in any trouble.

- Disruptive posters are removed by the administration.

- The administration was taking down my posters.

When I asked further questions, I was told the administration had the jurisdiction to remove any posters they wanted from school property, and was "working on" a policy explicitly outlining what was and was not allowed.

In my opinion, the claims made by the administration were questionable. The goal of the survey was to collect data independent of the administration, as clearly stated on the posters. It seems unlikely that students would mistake the project for something created by the district. Additionally, the idea that these sorts of surveys can only be conducted by the administration means that useful, unfavorable data would never be shared with students. This is already a problem: I know many people who regret their small school choice and who felt misinformed by district-supplied materials. By removing the posters, the district only makes it harder for the survey to reach smaller schools like AMPS, AHA, or CAS, and to collect accurate, actionable data.

The statement that "disruptive posters will be removed" also doesn't align with past precedent. When students hung posters announcing a school-wide walk-out, the administration allowed them to stay up, despite the activity directly disrupting classes. The district has also never attempted to prevent polling done by the student newspaper, which is also independent of the administration.

Finally, I don't think the administration should have complete jurisdiction over what stays up and what comes down. On principle, this allows the district to silence any criticism by labeling it "disruptive" or "inappropriate." While I understand the need for some guidelines, a blanket "we can take down whatever we want" policy seems counterproductive, especially at a school with such a rich history of student activism.

So what prompted the administration's actions? My best guess is the data published from the start, which was critical of some small schools. My small school (BIHS) was rated lower than almost every other school, by nearly a full point on a 5-point scale. There were also other negative statistics: around 20% of AC students said they had a teacher who did not know their name, compared to 5% of BIHS students. The administration, which does a great deal of work controlling the messaging surrounding small schools, may have seen this as a threat and tried to shut down the project rather than address the issues raised by students.

I do want to give the administration credit for keeping an open dialogue going; according to district policy, they had no obligation to tell me why the posters were taken down, and they are (or at least seem to be) open to feedback. I also think that, compared to many other school districts, their reaction was fairly tame. However, I wish more of this dialogue had happened before the posters were removed, rather than after. I would have appreciated the opportunity to address feedback on the project or my methodology, rather than facing a blanket stop on on-campus promotion.

What Comes Next?

To be honest, I'm really not sure. For one, I don't plan on taking down the site or stopping the survey. I think the data gathered so far is still useful, and I still want to get a more diverse range of opinions. I might also process the QR code data I have, just for fun.

Beyond that, I hope to keep talking with the administration about creating policies that benefit students. For now, I hope that the district is acting in good faith and truly does value student opinions. If the administration provides more information or comments in the future, I am committed to featuring them here. If not, I hope the data speaks for itself.